Handwritten Digit Recognition with Projected Quantum Kernels

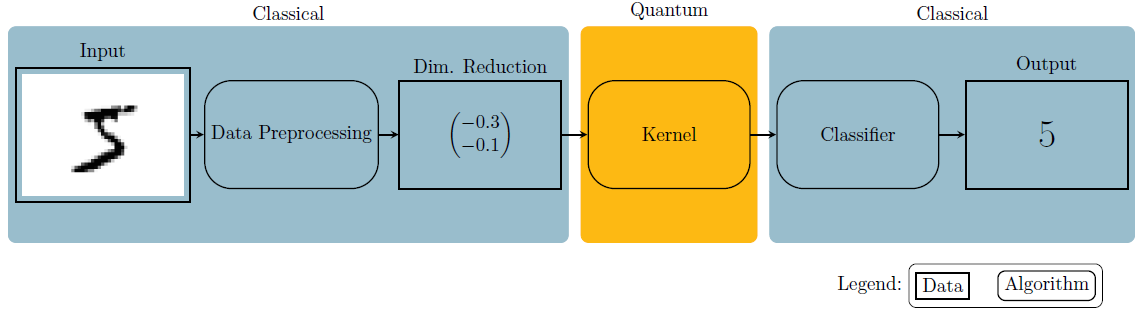

In this notebook, images of handwritten digits are classified by a quantum computer. Quantum machine learning methods promise advantages over conventional algorithms because they can map data into an exponentially large state space. However, the size of the space can also have disadvantages. In this notebook, the data is projected back into a classical space after being mapped into the Hilbert space, in order to take advantage of the benefits of QML without its drawbacks.

This notebook will make use of sQUlearn’s implementations of Quantum Support Vector Classification squlearn.kernel.QSVC and Projected Quantum Kernels squlearn.kernel.ProjectedQuantumKernel.

The data set used here is well known from conventional machine learning and easily solvable with conventional methods. The workflow that that is applied to solve the classification with a quantum computer is representative for this kind of task and can be transferred to more complex data sets.

Fig. 1: Pipeline used in this notebook.

Let’s start off by doing some imports and defining helper functions.

Imports and Definitions

[1]:

import matplotlib.pylab as plt

from matplotlib.lines import Line2D

import numpy as np

from sklearn.datasets import load_digits

from sklearn.manifold import TSNE

from sklearn.metrics import accuracy_score

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler

from squlearn import Executor

from squlearn.encoding_circuit import ChebyshevPQC

from squlearn.kernel.qsvc import QSVC

from squlearn.kernel.lowlevel_kernel import ProjectedQuantumKernel

The definitions of the helper functions are hidden on the website for sake of readability. If you want to replicate this code, please see the definitions of the helper functions at the original notebook

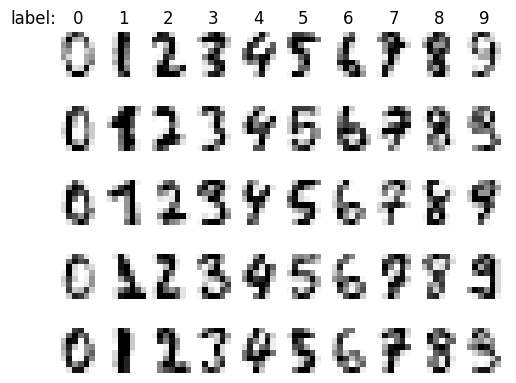

The Data

Classification of handwritten digits is a widely known task in machine learning. We utilize the data set included in scikit-learn. The data set is comprised of pictures of the size \(8 \times 8\) pixels that contain one single digit each as well as their according label, the numeric digit, depicted. We start by loading the data set and display the data.

[3]:

X, y = load_digits(return_X_y=True)

The input data is stored in row vectors of dimension \(64 \left(= 8 \times 8\right)\), one for each pixel, and the class label is a numeric value between \(0\) and \(9\). Let us continue to visualize some samples from the data set.

[4]:

plot_dataset(X, y, rows=5)

Preprocessing

To make the data readable by the machine learning model, we need to perform a couple of preprocessing step, starting with dimensionality reduction.

Dimensionality Reduction

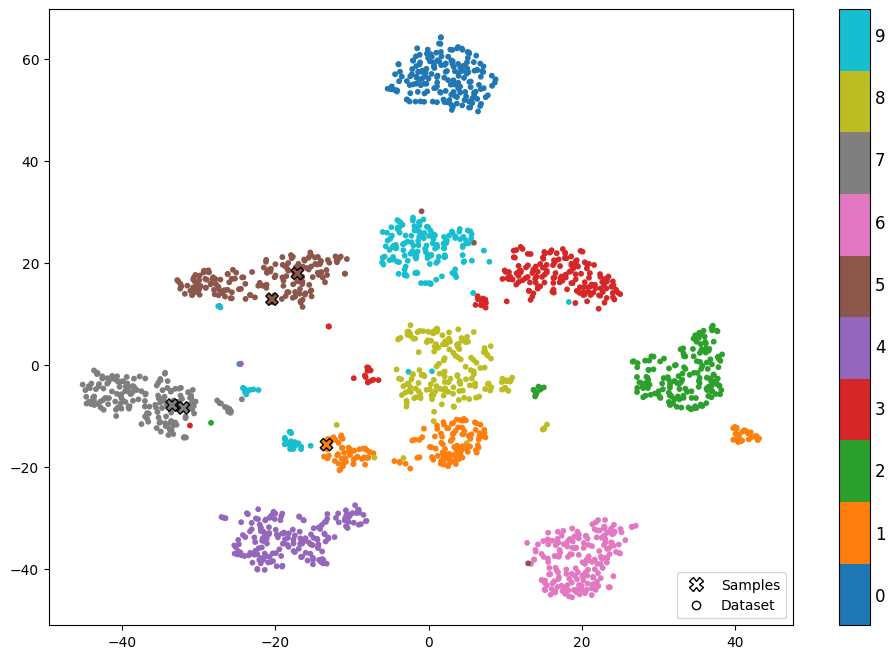

State of the art quantum computers can perform computations on a limited set of qubits. Also simulating them on classical hardware is only possible for a few such qubits. Handling our \(64\) input features (one for every pixel) would result in either a very wide (not possible yet) or very deep (very noisy) quantum circuit for our encoding circuit. Therefore we perform dimensionality reduction in form of T-SNE. This leaves us with two features for every input image.

[5]:

X_tsne = TSNE(n_components=2, learning_rate="auto", init="random", perplexity=40).fit_transform(X)

We can now plot the data in the feature space.

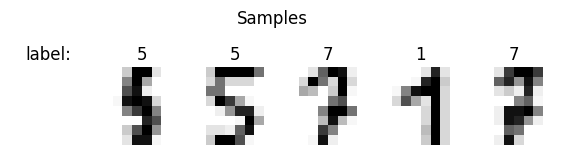

Hint: Rerun the cell a couple of times to view different samples and their respective position in feature space.

[6]:

rows = np.random.choice(np.arange(1000, X.shape[0]), 5, replace=False)

plot_numbers(X[rows, :], y[rows], title="label:")

plot_data_in_feature_space(X_tsne, y, highlight_rows=rows)

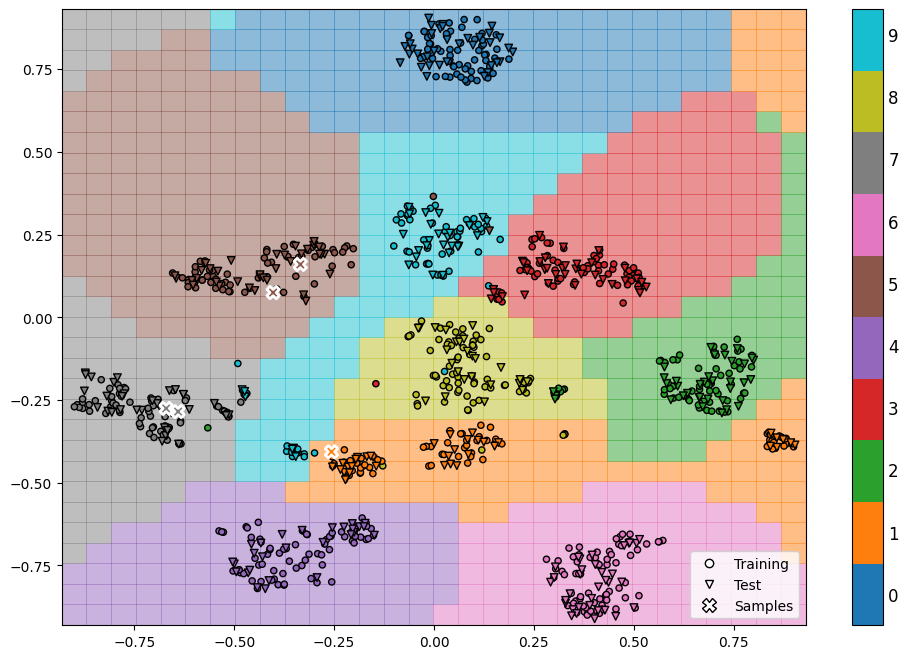

We can clearly see the different clusters of numbers in the new feature space. We also see the location of our samples marked by 🞭 with their true label.

Split Data sets

Lastly we select \(n\) samples and split the data set into one for training and one for testing.

[7]:

n_samples = 1000

X_train, X_test, y_train, y_test = train_test_split(

X_tsne[:n_samples, :],

y[:n_samples],

test_size=0.33,

random_state=42,

)

Min Max Scaling

Next we continue to scale the data to be in the interval \(\left[-0.9, 0.9\right]\) for both dimensions. This improves performance of the machine learning model by not overly considering one of the features.

[8]:

scaler = MinMaxScaler((-0.9, 0.9))

X_train = scaler.fit_transform(X_train)

X_test = scaler.transform(X_test)

Classification

We are now set to continue learning a model to classify the numbers.

Quantum Kernel Methods

Kernel methods are a set of powerful techniques used in machine learning for solving various problems, such as classification, regression, and clustering.

Fig. 2: Example of a encoding circuit.

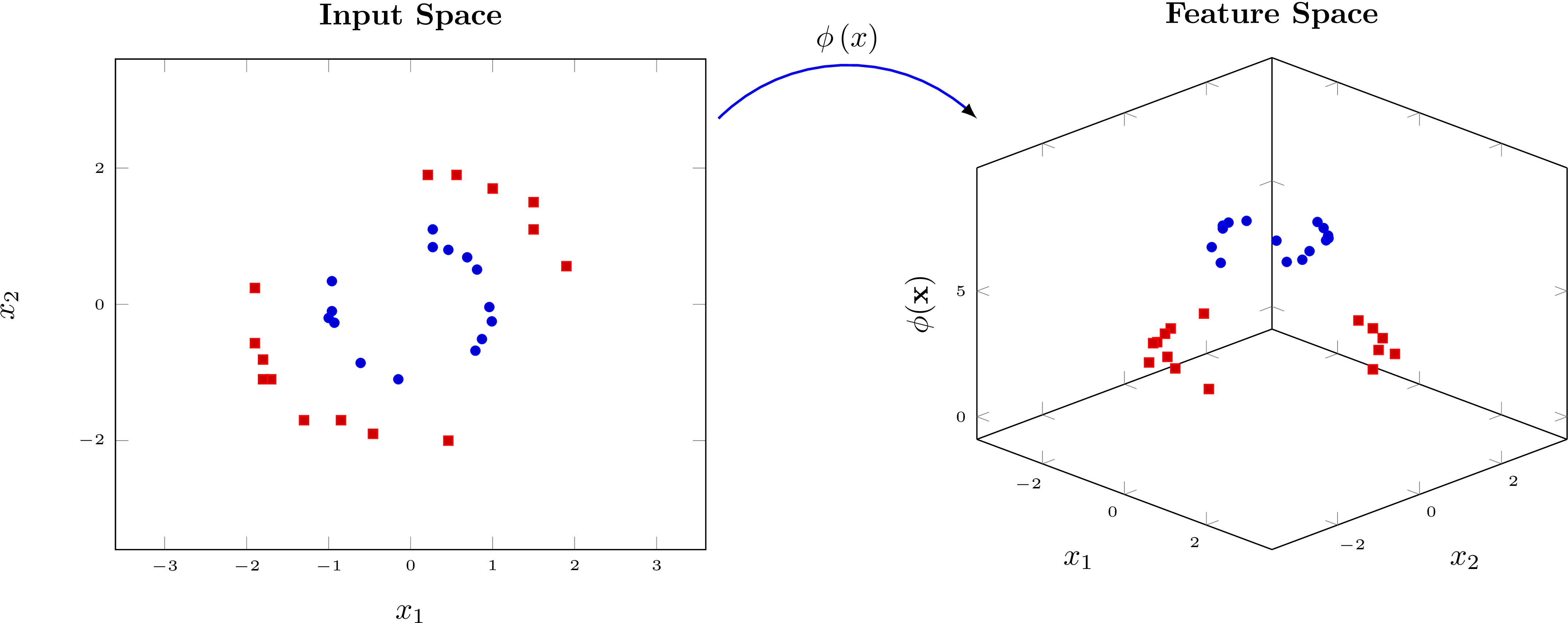

The core idea behind kernel methods is to transform the input data \(x\) into a high-dimensional feature space, where it becomes easier to separate or classify the data. The figure above shows an example for such a transformation \(\phi\left(x\right)\). It’s not possible to separate the data on the left with a line but we can separate the data on the right with a hyperplane. We continue to calculate the similarity between two data points \(x\) and \(y\) in the high-dimensional space by evaluating the scalar product \(\langle \phi\left(x\right), \phi\left(y\right) \rangle\). The classical kernel trick allows us to directly compute the similarity between the data points without explicitly calculating the encoding circuits.

Quantum Kernels

Quantum kernels leverage parameterized quantum circuits (PQC) to map an input \(x\) to a quantum state \(\ket{\phi\left(x\right)}\) in a potentially high dimensional quantum Hilbert space. In this case, the encoding circuit (cf. Fig. 2) is the data encoding map. We obtain a quantum kernel by measuring the similarity between the wave functions created by encoding two different values \(x\) and \(y\)

Calculating quantum kernels by encoding the features separately has several drawbacks. For example, each element of the kernel matrix has to be calculated separately, such that the overall calculation of the kernel matrix scales quadratically with the size of the data set. In this demonstrator, we projected quantum kernels instead. They have some intriguing properties.

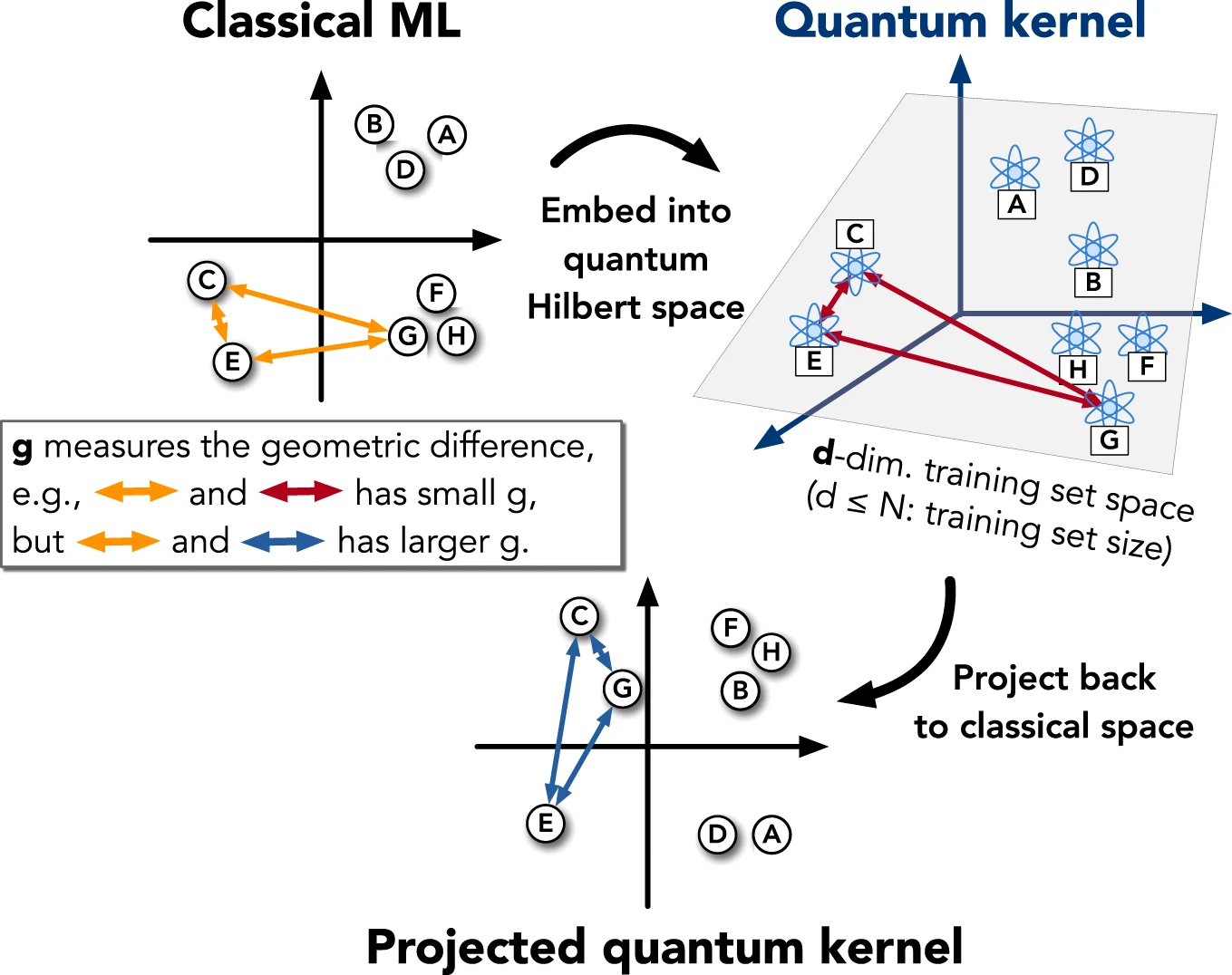

Projected Quantum Kernel

With large effective dimensions (the dimension of the feature space), quantum kernels will see all data far from each other and thus have bad learning performance. Projected quantum kernels alleviate this problem by projecting the feature back to a classical space and computing the kernel there. This also comes with the advantage of needing to encode each input into quantum Hilbert space only once which leads to a linear scaling with the number of data points.

Fig. 3: Visualization of a projected quantum kernel. Reprinted from Huang, HY., Broughton, M., Mohseni, M. et al. Power of data in quantum machine learning. Nat Commun 12, 2631 (2021). https://doi.org/10.1038/s41467-021-22539-9, licensed under CC BY 4.0.

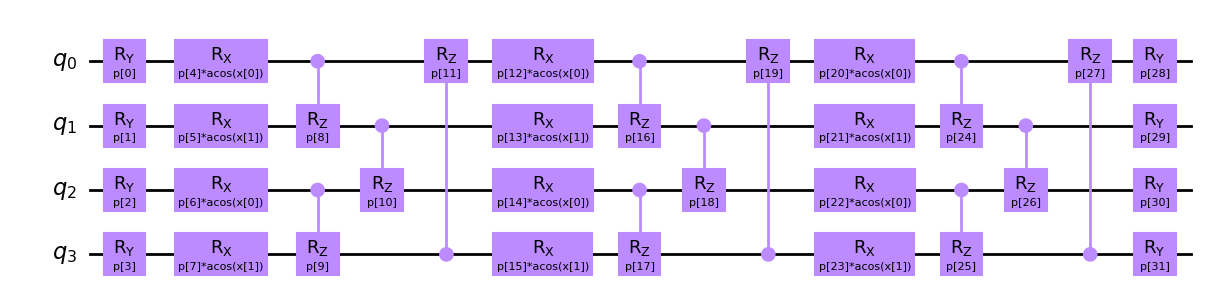

In this notebook, we encode the classical data into a quantum computer using a PQC with four qubits and three data-encoding layers. Each layer applies rotations \(\mathrm{R}_\mathrm{X}\), using the product of a trainable parameter with the \(\arccos\) of the input as the rotation angle and is followed by a circular entanglement layer using parameterized controlled \(\mathrm{R}_\mathrm{Z}\) gates. The layers are enclosed in between two layers of parameterized \(\mathrm{R}_\mathrm{Y}\) rotations. We will use a parameter vector \(x\) for the input data and a parameter vector \(p\) for the trainable parameters.

Let’s plot the resulting quantum circuit.

[9]:

encoding_circuit = ChebyshevPQC(num_qubits=4, num_layers=3)

encoding_circuit.draw(output="mpl", num_features=2)

[9]:

Note that since we only have a two-dimensional input \(x\) but twelve encoding quantum gates, we will repeat the data for the gates in ascending layer and qubit order. The resulting model is thus called a data re-uploading model. Each gate has their own trainable parameter, leaving us with \(32\) trainable parameters.

Let’s use the PQC to create a kernel matrix. We will measure in \(\mathrm{X}\), \(\mathrm{Y}\) and \(\mathrm{Z}\) direction on each qubit. This maps the embedded data back to a classical feature space of dimension \(12\). Furthermore, the projected quantum kernel will use a Gaussian outer kernel, i.e. it will compute each matrix element as

where \(\left\lvert\cdot\right\rvert_2\) corresponds to the \(L_2\)-norm for vectors. \(\gamma\) is a parameter which we will fix to \(0.5\). Note again, that we only need to compute each encoding circuit \(x_i\mapsto\mathrm{PQC}\left(\theta,x_i\right)\) once.

[10]:

kernel = ProjectedQuantumKernel(

encoding_circuit=encoding_circuit,

executor=Executor(),

measurement="XYZ",

outer_kernel="gaussian",

initial_parameters=np.random.rand(encoding_circuit.num_parameters),

gamma=0.5,

)

Support Vector Machine

We are now ready to train a Support Vector Machine (SVM) with our quantum kernel.

A Support Vector Machine (SVM) is a machine learning algorithm used for classification or regression tasks. It works by finding a hyperplane that separates data points into different categories. The hyperplane is chosen so that it maximizes the distance between the closest data points from each category. These closest points are called support vectors, and they help define the decision boundary. Once the decision boundary is established, new data points can be classified based on which side of the boundary they fall on.

Let’s now fit the SVM to our training data.

[11]:

qsvc = QSVC(quantum_kernel=kernel)

qsvc.fit(X_train, y_train)

[11]:

QSVC(C=1.0, break_ties=False, cache_size=200, class_weight=None,

decision_function_shape='ovr', max_iter=-1, probability=False,

quantum_kernel=<squlearn.kernel.lowlevel_kernel.projected_quantum_kernel.ProjectedQuantumKernel object at 0x000001A97FEAF5B0>,

random_state=None, shrinking=True, tol=0.001, verbose=False)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

QSVC(C=1.0, break_ties=False, cache_size=200, class_weight=None,

decision_function_shape='ovr', max_iter=-1, probability=False,

quantum_kernel=<squlearn.kernel.lowlevel_kernel.projected_quantum_kernel.ProjectedQuantumKernel object at 0x000001A97FEAF5B0>,

random_state=None, shrinking=True, tol=0.001, verbose=False)We check its performance on the training and test data.

[12]:

predictions = qsvc.predict(X_train)

print(f"Train accuracy score {accuracy_score(y_train, predictions)}")

predictions = qsvc.predict(X_test)

print(f"Test accuracy score {accuracy_score(y_test, predictions)}")

Train accuracy score 0.9701492537313433

Test accuracy score 0.9787878787878788

The accuracy score describes the share of correctly classified data points. In multiclass classification it is calculated as

We can go back to the previous plot we created and add more information to it. Specifically, we will highlight which data points were used for training and testing, and we will also show the true labels for each data point. To help us understand how the SVM makes its decisions, we will add a line on the plot called the decision boundary. Additionally, the colors of the background in the plot will indicate the region where the SVM assigns a specific label to each data point.

[13]:

plot_results(

clf=qsvc,

X_train=X_train,

y_train=y_train,

X_test=X_test,

y_test=y_test,

X_highlight=scaler.transform(X_tsne[rows]),

y_highlight=y[rows],

X_range=[(-0.9, 0.9), (-0.9, 0.9)],

resolution=30,

)

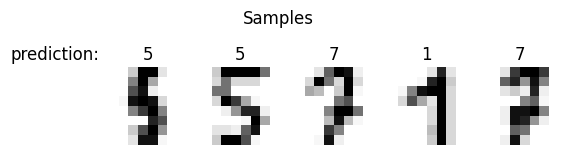

Finally let’s see how our model predicts the data samples we chose in the beginning.

[14]:

y_pred = qsvc.predict(scaler.transform(X_tsne[rows, :]))

plot_numbers(X[rows, :], y_pred, title="prediction:")